The Google Quantum AI team this week announced an achievement that many experts consider the Holy Grail of modern technology: crossing the quantum error-correction threshold. The technical details of this breakthrough demonstrate, for the first time, that it is possible to build a quantum computer whose accuracy improves as the system grows, finally solving the instability problem that has hampered this technology for decades.

Until now, quantum computers were described as “noisy” machines. Their qubits (quantum bits) are extremely sensitive: any slight variation in temperature or vibration causes errors in the calculations, a phenomenon known as decoherence. The great difficulty was that adding more qubits, whether to protect the information or to process more of it, made the system so complex that it ended up generating even more failures.

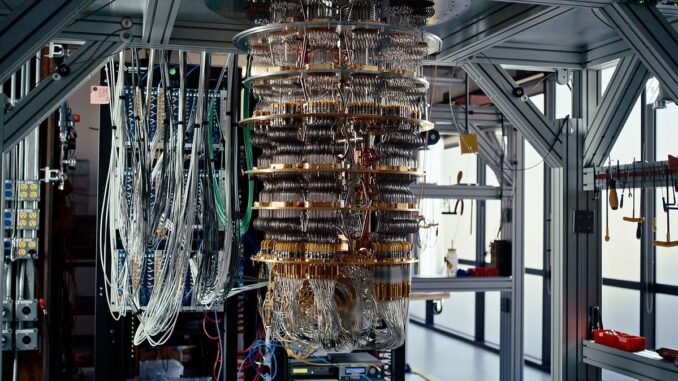

Google managed to reverse this logic using a form of error correction called the surface code. This technique works like a mesh or chessboard of physical qubits. In this structure, the data qubits are interspersed with auxiliary “measurement” qubits. These neighbors constantly monitor the state of the data qubits without “looking” at them directly, which would destroy the uncertain quantum state. If an error occurs at one point in the mesh, the system detects the discrepancy through this surveillance network and automatically corrects it.
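The idea of catching an error through neighbor checks alone can be illustrated with a much simpler relative of the surface code. The sketch below is a classical toy, not Google's method: it assumes a one-dimensional repetition code that only handles bit flips, and the function names (`measure_syndrome`, `decode`, `correct`) are hypothetical. Each check reports only whether two neighboring bits agree, never the value of either bit, and the decoder works from those reports alone.

```python
from typing import List


def measure_syndrome(data: List[int]) -> List[int]:
    """Parity of each neighbouring pair: 1 means the two neighbours disagree.

    This plays the role of the auxiliary "measurement" qubits: it reveals
    where neighbours differ, but not the value stored in any single bit.
    """
    return [data[i] ^ data[i + 1] for i in range(len(data) - 1)]


def decode(syndrome: List[int]) -> List[int]:
    """Return the smallest set of bit flips consistent with the syndrome.

    A syndrome fits exactly two flip patterns (one is the complement of the
    other); we assume the pattern with fewer flips is what really happened.
    """
    flips = [0]
    for s in syndrome:
        flips.append(flips[-1] ^ s)
    complement = [1 - f for f in flips]
    return flips if sum(flips) <= sum(complement) else complement


def correct(data: List[int]) -> List[int]:
    """Apply the flips proposed by the decoder to undo the error."""
    flips = decode(measure_syndrome(data))
    return [d ^ f for d, f in zip(data, flips)]


if __name__ == "__main__":
    corrupted = [1, 1, 0, 1, 1]          # a logical 1 with one bit flipped
    print(measure_syndrome(corrupted))   # the two checks next to the error fire
    print(correct(corrupted))            # the flip is undone
```

A real surface code arranges the checks on a two-dimensional grid and has to handle both bit-flip and phase-flip errors, but the principle is the same: errors are located from disagreements between neighbors rather than by reading the protected data.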

By expanding this mesh, the team demonstrated that a “logical qubit” (a group of many physical qubits working in unison) can be more stable than its individual components. Thus, the larger this “network” (the more qubits), the more reliable the calculations are. This is the point at which protection against errors overcomes the natural tendency towards chaos.
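Why more qubits help only once the hardware is good enough can be seen in a small calculation. The sketch below is an illustrative toy, not a model of Google's device: it assumes a majority vote over `n` independent copies of a bit, each flipped with probability `p`, and the function name `logical_error_rate` is hypothetical. In this toy the tipping point sits at `p = 0.5`; the threshold for a real surface code is far lower and depends on the hardware and the decoder.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority vote over n copies gives the wrong answer.

    Assumes each copy is flipped independently with probability p and n is odd,
    so the vote fails exactly when more than half of the copies are flipped.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    for p in (0.1, 0.6):  # one error rate below the toy threshold, one above
        rates = [logical_error_rate(p, n) for n in (3, 5, 7)]
        print(f"p={p}: " + ", ".join(f"n={n}: {r:.4f}" for n, r in zip((3, 5, 7), rates)))
```

With `p = 0.1` the failure probability shrinks each time the code grows, while with `p = 0.6` it gets worse. That is the sense in which being below the threshold turns extra qubits from a liability into protection.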

A world opens up

The practical importance of this advance is difficult to overestimate. With this milestone, we officially enter the era of Fault-Tolerant Quantum Computing. This means that, instead of just having prototypes for academic tasks, we are on the way to machines capable of running complex algorithms for hours or days without failing.

This opens the door to applications such as molecular simulation, where we can design new materials and medicines at the atomic level by simulating chemical interactions that the world’s most powerful classical supercomputers cannot process. It also makes it possible to optimize complex chemical processes, leading to much more efficient fertilizers, and to develop batteries with revolutionary storage capabilities.

A global update to security protocols will also be required, forcing the adoption of post-quantum cryptography to protect data against attacks by quantum computers.

Although it will be some time before we see these machines in commercial data centers, the way is opening for an industrial future of quantum computing that is no longer merely theoretical.
